What is cloud native – and what does it mean to develop software for the cloud? Find out what makes a software team’s life very different in cloud world. What is so special about cloud software?

Is there anything special at all? If ‘cloud’ only meant booking a virtual server and installing the application there, the answer would be no. However, this would leverage only a small part of what is possible. Hidden within the term “cloud native” is the assumption that you want on-demand scalability, flexible update capabilities, low administration overhead and the ability to adapt infrastructure cost dynamically to performance demands – just to name a few. Sounds wonderful… however, these things won’t come all by themselves. Your software needs to be designed, built and deployed according to…

The cloud-rules-of-the-game

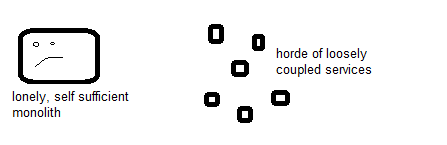

Huh, what’s this? First of all, don’t get me wrong: there is nothing bad about having a monolithic application residing on a single server or cluster – and from a development perspective this may be the simplest way to realize it. However, this monolith may get difficult to extend over time without side effects, and it may be difficult to set up for high availability or to scale with a growing number of users, a growing amount of data, or whatever else drives your scaling needs. And it may be hard to update your monolith to a newer version without impacting the users during the update.

Now consider the same application based on what is called a microservice architecture: the monolith gets split up into smaller, decoupled services that interact with each other as providers and/or consumers of stable APIs.

What makes microservices well suited for cloud applications?

Let’s assume that each service may exist not only once but with multiple instances up and running simultaneously. And let’s assume the consuming services are fault tolerant and can handle situations where a provider service doesn’t respond to a call. Wouldn’t that be cool? The overall system would be robust, and it would be very well suited to run on cloud infrastructure.

- Because now if service xyz is starting to become a bottleneck you can simply create more instances of that service to handle the extra load. Or even better, this would happen automatically according to rules that you have configured up-front. This approach is called “scaling out” (compared to the old-school “scale-up” approach where you would get a bigger server to handle more load).

- Next up, imagine that you need to update service xyz to a newer version. One way of doing this would be to create additional service instances with the new version and remove the old ones over time, an approach called “rolling update”.

- Or you decide to add a new feature to your application. Since only 2 of your 5 services are impacted you will only need to update these 2. Less change is easier to handle and means less risk.
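To make the first two bullets a bit more concrete, here is a minimal Python sketch. The function names, the load numbers and the instance limits are assumptions made up for illustration; in practice your cloud platform evaluates such rules for you. The scaling rule is a simple proportional one (instances grow with the ratio of observed to target load), and the rolling update starts one new instance before retiring one old instance, so capacity never drops.

```python
import math


def desired_instances(current, observed_load, target_load,
                      min_instances=1, max_instances=10):
    """Scale-out rule: how many instances bring the load per instance back to target?

    All parameter names and default limits are hypothetical.
    """
    if current < 1 or target_load <= 0:
        raise ValueError("need at least one instance and a positive target load")
    wanted = math.ceil(current * observed_load / target_load)
    # Never go below the minimum or above the configured maximum.
    return max(min_instances, min(max_instances, wanted))


def rolling_update(versions, new_version):
    """Yield the fleet after each step of a rolling update.

    Each step first adds one instance of the new version and then
    removes one instance of an old version.
    """
    fleet = list(versions)
    for version in versions:
        if version == new_version:
            continue  # this instance is already up to date
        fleet.append(new_version)
        fleet.remove(version)
        yield list(fleet)


# Two instances at 90% load with a 60% target: scale out to three.
print(desired_instances(2, observed_load=90, target_load=60))

for fleet in rolling_update(["v1", "v1", "v1"], "v2"):
    print(fleet)
```

The fleet size stays constant after every step, which is the property that lets users keep working while the update is in progress.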

Getting a microservice architecture right is not easy, but once you have it the advantages are huge. Note that a microservice architecture as such has nothing to do with the cloud. However, both go very well together, because in a cloud environment you need to be prepared for micro outages of single services anyway. You don’t really control the hardware any more, and you shouldn’t want to. To be cost efficient with a cloud approach, each service should be able to run on commodity infrastructure, of which you take and pay for only as much as you need.
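What “being prepared for micro outages” can look like on the consumer side is sketched below: retry the call a few times with a growing pause, and fall back to a default value if the provider stays silent. This is only one possible pattern under assumed names; `call_with_retry`, its parameters and the use of `ConnectionError` as the failure signal are illustrative choices, not part of any particular framework.

```python
import time


def call_with_retry(provider, fallback, attempts=3, base_delay=0.1,
                    sleep=time.sleep):
    """Call provider(); retry with exponential backoff, then return fallback.

    provider: a zero-argument callable wrapping the remote call (hypothetical).
    fallback: the value to use when every attempt has failed.
    sleep:    injectable so that tests do not have to wait for real.
    """
    for attempt in range(attempts):
        try:
            return provider()
        except ConnectionError:
            # Pause before the next attempt, but not after the last one.
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)
    return fallback


def make_flaky_provider(failures):
    """Build a provider that fails a given number of times and then answers."""
    state = {"left": failures}

    def provider():
        if state["left"] > 0:
            state["left"] -= 1
            raise ConnectionError("provider instance not reachable")
        return "price list v7"

    return provider


# The consumer survives two short outages and still gets the real answer.
print(call_with_retry(make_flaky_provider(2), fallback="cached price list"))
```

Whether a fallback value is acceptable depends on the service; for some calls it is better to surface the error after the last attempt.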

Where will your services live? – serverless vs. containers

For hosting your workers or compute loads, consider a serverless approach over a VM- or container-based one. This basically means that you only write the service code as such and determine when and how the logic will be triggered for execution. All the rest is handled by the cloud infrastructure. On Amazon’s AWS this technology is called Lambda, Microsoft named it Azure Functions, Google calls it Cloud Functions. The principle is always the same. There’s no virtual machine any more, not even containers managed with Docker or Kubernetes, which means fewer things to manage and look after and less operations and maintenance effort. And you only pay for the execution time. If nothing happens there’s no compute cost. If you suddenly require high compute performance your serverless approach will be scaled automatically – if you have done your architecture homework. Serverless will, for example, require that your services are stateless, which means that whatever information they need to keep between two executions must be stored externally, e.g. in a cache or database service. As with microservices, the advantages need to be earned. Serverless will not solve all problems, but make sure your team (at least the architect) understands the concepts and can make informed decisions about its use.
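The statelessness requirement is easier to see in code. The following Python sketch is deliberately provider-neutral: `handle_visit` and `InMemoryStore` are hypothetical names, and the handler signature is not the one used by Lambda, Azure Functions or Cloud Functions. The in-memory store merely stands in for an external cache or database service so that the example can run on its own.

```python
class InMemoryStore:
    """Stand-in for an external cache or database service (assumption)."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=None):
        return self._data.get(key, default)

    def put(self, key, value):
        self._data[key] = value


def handle_visit(event, store):
    """Stateless handler: everything it remembers lives in the store.

    The function keeps no module-level or instance state, so any number
    of copies can run in parallel and any copy can serve any request.
    """
    user = event["user"]
    visits = store.get(user, 0) + 1
    store.put(user, visits)
    return {"user": user, "visits": visits}


store = InMemoryStore()
handle_visit({"user": "alice"}, store)
# A second execution, possibly on a different machine, sees the same state.
print(handle_visit({"user": "alice"}, store))
```

Note that the read-then-write in `handle_visit` is not safe when two executions update the same key at once; with a real storage service you would rely on whatever atomic update it offers.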

Other game changers in cloud world

What else has changed in a world where dedicated physical servers seem to have disappeared? Very trivial things need to be handled differently.

- There’s no local hard drive any more. If your service runs in a VM or Docker container it may feel as if there were one, but remember: in cloud world machines are cattle, not pets. A VM/container may die or disappear and will be replaced by a new one. Bad luck if you had data on a local drive of that machine. Now you’ll need to think about alternative ways of storing your data or settings. In cloud world you may want to use a storage service for that purpose, a central configuration service, a database, environment variables… the choice depends as always on the requirements. Make sure the team knows the available cloud building blocks.

- If you are a Microsoft shop: there’s no registry any more. See above comments.

- For logging there are no local files any more. These would not make much sense anyway in a world where services are distributed. Instead, you’ll send log output to a central logging service. That will consolidate the logs of the various services in one central place, making troubleshooting much easier. There are many open source solutions for logging, or you may just use the one your cloud provider offers.

- And finally, the term infrastructure will get a whole new meaning in cloud world. Infrastructure still exists, but now it needs to be managed very differently. You should strive to set it up automatically, based on scripts that you can re-run any number of times. This is crucial because you will need more than one cloud system. At the very least you should have one for development and testing which is separate from the real one – your production system. The two environments should be as identical as possible, otherwise your test results are not meaningful and you will chase phantom problems that are just caused by some infrastructure misconfiguration. Those scripts will set up your required cloud resources. This means you describe the infrastructure in code, just like your service logic. Infrastructure as Code (IaC) is the term for that. Check out this article for more.
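Two of the points above, settings without a local drive and logging without local files, can be sketched in a few lines of Python. The variable names `SERVICE_NAME`, `DATABASE_URL` and `LOG_LEVEL` as well as the log fields are assumptions chosen for this example. The service reads its settings from environment variables and writes one JSON object per log line to standard output, where a log collector of your choice could pick it up.

```python
import json
import os
from datetime import datetime, timezone


def load_config(env=None):
    """Read settings from environment variables instead of a local file.

    The variable names are hypothetical; DATABASE_URL is treated as required.
    """
    env = os.environ if env is None else env
    if "DATABASE_URL" not in env:
        raise KeyError("DATABASE_URL must be set for this service")
    return {
        "service_name": env.get("SERVICE_NAME", "demo-service"),
        "database_url": env["DATABASE_URL"],
        "log_level": env.get("LOG_LEVEL", "INFO"),
    }


def log_event(service, level, message, **fields):
    """Write one structured log line to stdout and return it."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "service": service,
        "level": level,
        "message": message,
        **fields,  # extra context, e.g. an order id
    }
    line = json.dumps(record)
    print(line)
    return line


# Passing a dict instead of the real environment keeps the example runnable.
config = load_config({"DATABASE_URL": "postgres://db.example.internal/shop"})
log_event(config["service_name"], config["log_level"], "service started")
```

Because the same code runs unchanged in development and production and only the environment differs, this also fits the goal of keeping both environments as identical as possible.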

So what is Cloud Native after all?

Hopefully it has become clear that software needs quite a few specific considerations to feel comfortable in cloud world. This is why “lift and shift” is a valid approach for existing legacy software, but won’t leverage the full cloud potential. In order to run efficiently in the cloud, software must be designed for that purpose – this is the meaning of cloud native: software that is architected and optimized for a cloud environment. For legacy software that usually means that an efficient move to the cloud may require major refactoring or even a complete rewrite.

So welcome to cloud world. Tremendous power and flexibility are at your disposal. However, you’ll need to architect your software with the cloud and its building blocks in mind. Decompose your application into services. Consider serverless approaches to reduce operation effort and improve scalability and availability. Focus on your domain knowledge and your specific value-add. For the basics, use existing building blocks wherever it makes sense.

Check out these related articles:

- What does cloud mean? When and when not to go “Cloud”

- IaaS vs. SaaS – where will your software live?

- What is DevOps? Why developing for the cloud changes a dev teams life

- Cattle or Pet – what IaC means and why you shouldn’t use admin-UI’s