Why manually installed servers are like pets

Before we look at new approaches, let’s see how IT infrastructure was managed in the past. The life of any IT system usually started with a basic server setup. Joe the admin would plug in the new hardware, configure hard drives and network, and then install the operating system. On top of that he would install whatever software or applications were required. He did this by hand, running scripts that he had to adapt to each new system because names, IP addresses, etc. changed every time. Then Joe would check that everything worked, perhaps fine-tune it and add whatever else was required before putting the shiny new machine into production. If there was a problem, he would troubleshoot it and correct his setup. Over time Joe would take care of his machine: patch it with newer versions of the OS and system software, look after backups, maybe add disk space or memory. The server would be like Joe’s dog: a pet.

Pets are unique

If Joe’s pet had a problem, Joe would find the cause and fix it. Maybe he would have to experiment with one or two settings and poke around a bit, but in the end it always worked. Yet the machine became more and more unique over time, more unlike any other server in the world: a pet. A lot had to happen before Joe would set up his beloved server from scratch, such as a disaster like a virus infection. Then poor Joe would have to go through all his setup steps again, trying not to forget anything.

Fast forward to the cloud world. Remember, you don’t own the hardware anymore; you basically just rent it. Or you don’t even rent the hardware but simply consume services (see: IaaS vs. SaaS). There is no way you can keep managing your infrastructure the way Joe did.

Well, you can, but then the sky will fall on your head!

Why? First of all, you will probably need to set up your cloud infrastructure more than once. You need a production system, but you won’t use it for testing during development, so you need another environment for development. Or you may need to set up your entire infrastructure in another region, or with another provider. Or one day you may want to experiment with a new approach or run separate tests in parallel: yet another system. It is crucial that all these systems have exactly the same configuration. Otherwise, be prepared for big surprises at release time…

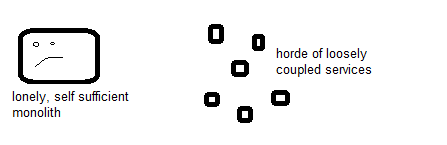

Cloud infrastructure is cattle

The only reasonable way to handle this is automated infrastructure setup. Don’t go down Joe’s road. He could do everything manually and get away with it because he owned the hardware. In the cloud world you don’t. Your infrastructure is more like this:

A herd of cattle. Your machines and all other cloud building blocks are standardized, and potentially there are many of them. They don’t have a personality of their own, or at least they shouldn’t. If one disappears, another takes its place. This means you need to be able to replace it quickly, and this is where automated infrastructure setup comes in. You write scripts that set up and configure your infrastructure without ever touching an admin UI. Your infrastructure becomes code: Infrastructure as Code, or IaC.
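To make the cattle idea a bit more concrete, here is a minimal Python sketch. It assumes a hypothetical `launch_server` function standing in for whatever your cloud provider’s API offers; `SERVER_TEMPLATE` and `reconcile` are made-up names as well. The point is that a lost server is never nursed back to health: the code simply launches a fresh one from the same standardized template.

```python
"""Minimal "cattle" sketch: every server is stamped from one template.

SERVER_TEMPLATE, launch_server and reconcile are hypothetical names that
stand in for whatever your cloud provider's API actually offers.
"""
import itertools

# One standardized template: no server gets hand-made tweaks.
SERVER_TEMPLATE = {"image": "base-image-v1", "size": "small"}

_ids = itertools.count(1)


def launch_server(template: dict) -> dict:
    """Pretend to launch a server; a real version would call a cloud API."""
    return {"id": f"server-{next(_ids)}", **template}


def reconcile(running: list[dict], desired_count: int) -> list[dict]:
    """Top the herd back up to desired_count.

    Lost servers are not repaired or investigated; fresh ones are
    launched from the same template instead.
    """
    herd = list(running)
    while len(herd) < desired_count:
        herd.append(launch_server(SERVER_TEMPLATE))
    return herd


if __name__ == "__main__":
    herd = reconcile([], desired_count=3)
    herd.pop(1)  # one server "disappears"
    herd = reconcile(herd, desired_count=3)
    print([s["id"] for s in herd])
```

Cloud platforms usually offer this as a built-in feature (e.g. auto scaling groups on AWS), so you would rarely write such a loop yourself; what matters is that the replacement comes from code, not from a person.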

What infrastructure as code means

People often use the phrase “cattle vs. pets” when talking about IaC. It goes hand in hand with the “immutable server” concept: once infrastructure is in place, you never change its configuration. Treat it as immutable. Instead, fix your IaC code, run it again to set up a new machine, and delete the old one. This is fast and reliable, and it gets you to exactly the same state every time. You can repeat it as often as needed, and in the end you have a tested piece of IaC code that you can reuse whenever you need it later on.

You will probably have parameters that are specific to a certain environment, e.g. the URLs of your development and production systems. Keep them separate from your IaC code. You want to run identical code for every variant of your system; that is how you ensure the variants really are identical.

IaC also solves another challenge: manual changes to your production system. Consider these a high-risk activity and don’t make them. Instead, test your updated IaC code first and then run it against production. This reduces the chance of human error, ensures reproducible results and, at the same time, gives you full traceability of every production change.
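The immutable-server and parameter ideas can be illustrated with a small Python sketch, under simple assumptions: `ENV_PARAMS`, `ServerSpec`, `build_spec` and `roll_out` are hypothetical names, the URLs are placeholders, and a frozen dataclass stands in for a deployed server. The same `build_spec` code runs for every environment, and a change produces a new spec instead of modifying the existing one.

```python
"""Sketch: identical code, separate per-environment parameters, and
immutable replacement instead of in-place changes.

ENV_PARAMS, ServerSpec, build_spec and roll_out are hypothetical names;
the URLs are placeholders.
"""
from dataclasses import dataclass, replace

# Environment-specific parameters live apart from the code below.
ENV_PARAMS = {
    "dev": {"url": "https://dev.example.com", "instances": 1},
    "prod": {"url": "https://www.example.com", "instances": 3},
}


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class ServerSpec:
    url: str
    instances: int
    app_version: str


def build_spec(env: str, app_version: str) -> ServerSpec:
    """The same code runs for every environment; only parameters differ."""
    params = ENV_PARAMS[env]
    return ServerSpec(url=params["url"], instances=params["instances"],
                      app_version=app_version)


def roll_out(current: ServerSpec, new_version: str) -> ServerSpec:
    """Never patch `current`: return a new spec; the caller then deletes
    the old infrastructure once the new one is up."""
    return replace(current, app_version=new_version)


if __name__ == "__main__":
    prod_v1 = build_spec("prod", "1.0")
    prod_v2 = roll_out(prod_v1, "1.1")
    print(prod_v1)
    print(prod_v2)
```

Because the spec is frozen, an attempt to “just quickly change” a running configuration fails loudly, which mirrors the rule of fixing the code and redeploying instead.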

How to write IaC code

How would you manage and run this IaC code? Since it’s code, you keep it under version control and execute it via a build pipeline; see this article on tooling. And how exactly do you write IaC code? There are several approaches. The large cloud providers each have their own formats, e.g. CloudFormation for AWS or ARM templates for Azure. Or take a look at Terraform, which works across many cloud providers and has some nice additional features. If you need to automate the setup of individual virtual machines (which you should avoid; consider serverless instead), then Puppet, Chef or Ansible are probably the most popular options.
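As an illustration of the provider-specific route, here is a sketch that keeps a single CloudFormation template in Python and serializes it to JSON, a format CloudFormation accepts. The bucket name prefix `example-app`, the parameter name `EnvName` and the helper `parameter_overrides` are placeholders of our own; only the environment name differs between deployments.

```python
"""Sketch: one CloudFormation template for every environment.

The bucket prefix "example-app", the parameter "EnvName" and the helper
parameter_overrides are placeholders, not part of any real project.
"""
import json

TEMPLATE = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Parameters": {
        # Environment-specific value supplied at deploy time, not hard-coded.
        "EnvName": {"Type": "String", "AllowedValues": ["dev", "prod"]},
    },
    "Resources": {
        "AppBucket": {
            "Type": "AWS::S3::Bucket",
            "Properties": {
                # Fn::Sub substitutes the parameter value into the name.
                "BucketName": {"Fn::Sub": "example-app-${EnvName}"},
                "Tags": [{"Key": "environment", "Value": {"Ref": "EnvName"}}],
            },
        }
    },
}

# Per-environment parameters, kept apart from the template itself.
PARAMETERS = {"dev": {"EnvName": "dev"}, "prod": {"EnvName": "prod"}}


def parameter_overrides(env: str) -> list[str]:
    """Format one environment's parameters as Key=Value pairs, the form
    accepted by `aws cloudformation deploy --parameter-overrides`."""
    return [f"{key}={value}" for key, value in PARAMETERS[env].items()]


if __name__ == "__main__":
    print(json.dumps(TEMPLATE, indent=2))
```

From a build pipeline, you could save the printed template as `template.json` and deploy it with `aws cloudformation deploy --template-file template.json --stack-name example-app-dev --parameter-overrides EnvName=dev`, then use the very same template with `EnvName=prod` for production.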

Check out these related articles:

- What does cloud mean? When and when not to go “Cloud”

- IaaS vs. SaaS – where will your software live?

- Cloud Native – What it means to develop software for the cloud

- What is DevOps? Why developing for the cloud changes a dev team’s life